The Best Way to Review Contracts Is Humans & AI

AI contract review allows people to negotiate contracts faster, cheaper, and with better results. In this partnership of people + AI, let AI do what it does best and let people do what they do best.

I’m the chief data scientist at LegalSifter, a company that combines artificial intelligence (AI) and expertise to help with contracts.

One of our products, LegalSifter Review, helps people review and negotiate contracts. It deploys “Sifters”—AI algorithms trained to read text, look for specific concepts, and provide advice. Clients and prospects often ask us questions about our AI approach, including:

- When people use LegalSifter Review: What do the Sifters do? What do people do? And why?

- How accurate are our Sifters?

- How are we different from other AI contract review tools?

The purpose of this article is to describe our AI approach and answer these questions.

A Partnership: People + AI

We believe that contract review works best when people use AI. Combining the strengths of people and AI allows you to negotiate faster, cheaper, and with better results.

Our Sifters are fast—they can read and interpret thousands of pages in seconds. They’re also perceptive—they can spot, and flag for review, subtle nuances in written expression that people might otherwise miss. And they’re consistent—they don’t get tired, forget, or lose focus.

But AI alone isn’t enough. That’s why people are in the driver’s seat.

Who Does What?

Our guiding principle is that someone using AI should always outperform someone who isn’t using AI. Most people would intuitively agree with this—it seems obvious. But finding the balance between people and AI can be challenging. What if AI does too little, and isn’t helpful? What if AI tries to do too much, but does it poorly?

This is a question about boundaries and AI system design. In a partnership between people and AI, who does what? We have decided that the best approach is to let AI do what it does best and let people do what they do best.

What Sifters Do

So we use Sifters to find provisions and extract information from them. Each Sifter is trained to read through documents and look for a specific concept. Sifters learn from experience and improve over time. They can find specific provisions, and they can also extract structured information (such as dates, party names, or other information) from text. They do it lightning fast and consistently.

And when a Sifter identifies a provision of interest, it also offers advice to help users assess the significance of that text. That advice is either written by us or developed by users based on their internal best practices (including perhaps their playbooks) or with the help of outside counsel.

We use the phrase “Combined Intelligence™️” to describe this combination of AI and human expertise. Our Sifters find provisions of interest (using AI) and deliver expert advice (written by human experts). This allows our users to get expert, in-context advice as they review their contracts.

What People Do: Apply Reasoning

People can’t beat Sifters for speed and consistency. But people are the clear winners when it comes to applying reasoning and using world knowledge.

In some cases, information isn’t directly stated in the text, but it could be inferred through reasoning. For example, a user knows that a contract was signed by both parties on 25 March 2020. But the contract says, “This agreement is effective on 1 January 2020”—for better or worse, it’s routine for contracts to be backdated—and it also says, “The initial term of this agreement is for one year.” When does the contract terminate? AI systems called “expert systems” try to sort through fragmentary information of this sort to reach appropriate conclusions, but they tend to perform erratically. By contrast, a human reviewer would have no problem determining that the signing date is irrelevant, that the effective-date provision is relevant, and that one year from the effective date is 1 January 2021.

That’s why we have people take the lead on tasks that require reasoning.
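
To make the example above concrete, here is a minimal Python sketch of the date arithmetic. It is only an illustration, not part of LegalSifter Review: the variable names and the `add_years` helper are hypothetical. Note that the calculation itself is trivial. The step that calls for human reasoning is deciding that the effective date, not the signing date, is the one that matters.

```python
from datetime import date


def add_years(start: date, years: int) -> date:
    """Return the same calendar day `years` later (29 Feb falls back to 28 Feb)."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # Only reached when start is 29 Feb and the target year is not a leap year.
        return start.replace(year=start.year + years, day=28)


# Facts from the example (hypothetical variable names).
signing_date = date(2020, 3, 25)   # known to the user, but irrelevant to the term
effective_date = date(2020, 1, 1)  # "This agreement is effective on 1 January 2020"
initial_term_years = 1             # "The initial term of this agreement is for one year"

# The reviewer's judgment: the term runs from the effective date.
term_end = add_years(effective_date, initial_term_years)
print(term_end.isoformat())  # 2021-01-01
```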

What People Do: Assess Context, and Make Edits

Sifters look for relevant text and offer advice. But we don’t let Sifters automatically edit and redline contracts. We think that this is a task for people, one that requires human intelligence and contextual knowledge to do safely.

We could add in an “auto-redline” algorithm, but it could be risky: redlines are often dependent on the specific context of the negotiation. What if the algorithm was trained on a data set that is irrelevant to the current negotiation? What if your negotiating position needs to change based on party relationships, business pressures, or deal-specific factors? The algorithm might make irrelevant or bad edits. And the user might put too much trust in the algorithm, and fail to think critically about the edits being made.

So instead of automatically editing contracts, our Sifters tell the user what’s in the contract, and they offer relevant advice. This advice includes negotiating guidelines and preferred language. The user then determines what edits to make, based on the context of the negotiation.

What Sets Our AI Apart?

Our goal is to meet 100% of your AI needs out of the box, with thousands of high-quality Sifters ready for immediate use. There are other AI-and-contracts companies—each with their own focus and AI approach—but we believe that we stand out in three areas.

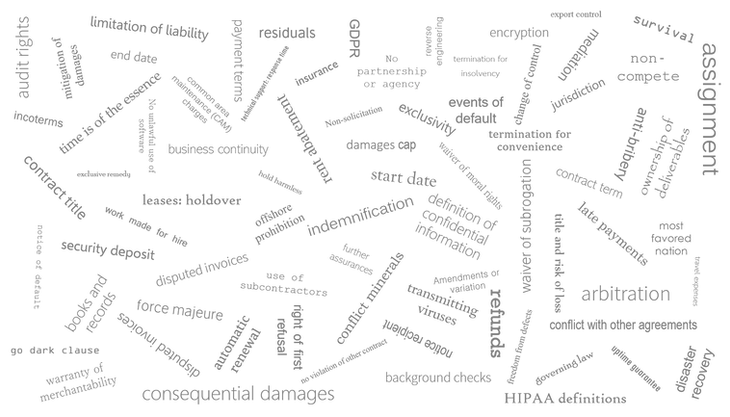

First, we have developed more than 1,800 Sifters covering a broad range of contract types, including ordinary-course commercial contracts (leases, contracts for the purchase of software, service, or goods, confidentiality agreements, and more), as well as more specialized areas (information security, shipping, data privacy, data processing, and more). Our clients can use them immediately, without custom training.

Second, we have unrivaled expertise. Building a Sifter requires understanding all the ways a concept might be expressed in a contract. That’s why Ken Adams, our chief content officer, is in charge of building the specifications for our Sifters. Ken has established an international reputation as the leading expert on contract language, and he’s the author of the groundbreaking A Manual of Style for Contract Drafting, published by the American Bar Association. Once Ken has signed off on the specifications for a Sifter, our Legal Annotation and Data Science teams take over. We have a rigorous AI development process, and our Sifters currently average an F1 score of 96-97% upon release.
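
For readers unfamiliar with the metric, F1 is the harmonic mean of precision (how many flagged provisions were correct) and recall (how many relevant provisions were found). The Python sketch below uses that standard definition. The counts are made up for illustration and are not LegalSifter evaluation data, and the function name is hypothetical.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Standard F1: harmonic mean of precision and recall."""
    if true_pos == 0:
        return 0.0  # avoids division by zero when nothing was correctly found
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Made-up counts: 96 provisions correctly flagged, 3 flagged in error, 4 missed.
score = f1_score(true_pos=96, false_pos=3, false_neg=4)
print(round(score, 3))  # 0.965
```

Because F1 penalizes both false alarms and misses, a high score means a model is doing well on both counts rather than trading one for the other.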

And third, we aim for continuous improvement. Sifters can make mistakes—just like humans make mistakes. When clients notice mistakes, they can tell us via our “Sifter Trainer” feedback system. This system allows our Sifters to learn and improve each week, based on feedback from all of our clients. Our Sifters never forget, and they never lose focus. They continually learn and improve.

Questions?

We hope you find this helpful. If you’d like to learn more about our company or our products, please visit https://www.legalsifter.com/ or contact us at help@legalsifter.com.